The main aim of creating a website is to have it appear on the search engine results page (SERP) when people search for specific keywords. To get there, search engines crawl the website or webpage, index it, and finally rank it. Their algorithms are commonly said to weigh more than 200 ranking factors.

To rank your website in the SERP, you first have to work on crawl accessibility and indexing. When a page cannot be crawled, the search engine bot will not index it, so it will not be shown on the results pages.

SEO services can help get a website, a new webpage, or a new blog post indexed quickly.

To do this, we first have to know the reasons a site is not being indexed. Only then can we look for a solution and, finally, apply the tips for instant indexing.

Reasons for not Indexing the Website:

- Error in Robots.txt

- Error in meta tags

- No proper sitemap

- Too many redirects

- Canonical tag not implemented

- Crawl budget exceeded

- No proper breadcrumbs

Robots.txt

- This is the main file that tells crawlers which parts of the site they may crawl.

- If a Disallow rule in the file covers a page, the crawler will not crawl that page, so its content cannot be indexed. The noindex and nofollow values do not belong in robots.txt; they go in meta tags or in the X-Robots-Tag header.

- If the file contains "User-agent: *" followed by "Disallow: /", it tells every crawler not to crawl any of the site's content.

- When the X-Robots-Tag is set in the HTTP header and contains noindex, the page is kept out of the index; nofollow tells crawlers not to follow the links on the page.

- .htaccess is a hidden file that resides in the www or public_html folder. When it is badly configured, there is a chance the website will not be indexed.
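A quick way to see how a crawler reads these rules is to test them locally. The sketch below uses Python's standard `urllib.robotparser`; the helper name `is_crawlable`, the sample rules, and the `example.com` URLs are made up for illustration, and the standard-library parser may not match Googlebot's behavior in every edge case.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rule sets, not taken from any real site.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"
BLOCK_PRIVATE = "User-agent: *\nDisallow: /private/\n"

if __name__ == "__main__":
    page = "https://example.com/blog/new-post"
    print(is_crawlable(BLOCK_ALL, page))      # whole site is blocked
    print(is_crawlable(BLOCK_PRIVATE, page))  # only /private/ is blocked
```

If the first call reports that the page is blocked, the Disallow rule is the thing to fix before looking anywhere else.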

Meta Tags

- When the robots meta tag in a page's source contains noindex, that page will not be indexed; nofollow tells crawlers not to follow the links on it.

- To get the page indexed, we have to remove the noindex value (and any unwanted nofollow) from the source code.
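The check described above can be sketched with Python's built-in `html.parser`. The names `RobotsMetaParser`, `split_directives`, and `is_indexable` are illustrative, and the sketch assumes a simple comma-separated X-Robots-Tag value without a user-agent prefix.

```python
from html.parser import HTMLParser


def split_directives(value):
    """Turn 'NoIndex, nofollow' into {'noindex', 'nofollow'}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.directives |= split_directives(attributes.get("content", ""))


def is_indexable(html, x_robots_tag=""):
    """Return False if the page source or the header value asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = parser.directives | split_directives(x_robots_tag)
    # "none" is shorthand for "noindex, nofollow".
    return not ({"noindex", "none"} & directives)
```

Calling `is_indexable(page_source, header_value)` on a page that refuses to show up in Google is a fast first check before digging into Search Console.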

SiteMaps

- When the sitemap submitted in Google Search Console is out of date, new pages may not be discovered and indexed.

- We can check this in Google Search Console and re-submit the sitemap.

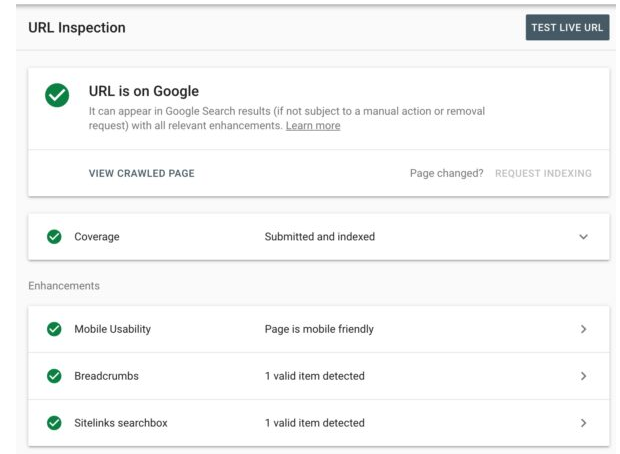

- Google Search Console also has a URL Inspection tool, with which we can inspect a URL and test the live URL.
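To keep the sitemap current before re-submitting it, the file can be regenerated whenever pages change. Below is a minimal sketch using Python's standard `xml.etree.ElementTree`; the function name `build_sitemap`, the URLs, and the dates are placeholders, and a real site would pull them from its CMS or database.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages for illustration only.
    print(build_sitemap([
        ("https://example.com/", date(2024, 1, 15)),
        ("https://example.com/blog/new-post", date(2024, 2, 1)),
    ]))
```

The output can be saved as sitemap.xml at the site root and then re-submitted in Google Search Console.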

Redirection

- When we set up a long redirect chain, or the page is not reachable, the webpage may not be crawled by the search engine.

- It also hurts the user experience.

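- So the redirect chain should be shortened, and an unreachable page should be taken out of the results. The Removals tool in Google Search Console hides a URL temporarily; a permanent removal means deleting the page or marking it noindex. The disavow tool applies to backlinks, not to removing pages.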
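Long chains and loops are easy to spot once the redirects are written down as a table. The sketch below works on a plain dictionary of redirects instead of live HTTP requests so that it runs offline; `redirect_chain` and the `max_hops` limit of 5 are illustrative choices, not a documented Googlebot threshold.

```python
def redirect_chain(start, redirects, max_hops=5):
    """Follow start through the redirects mapping and return every URL visited.

    Raises ValueError on a redirect loop or when the chain exceeds max_hops.
    """
    chain = [start]
    seen = {start}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in seen:
            raise ValueError(f"redirect loop back to {target}")
        chain.append(target)
        seen.add(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirects from {start}")
    return chain


if __name__ == "__main__":
    # Hypothetical redirect table: old URL -> new URL.
    table = {"/old-page": "/older-page", "/older-page": "/new-page"}
    print(redirect_chain("/old-page", table))
```

Any chain longer than two entries is a candidate for pointing the first URL straight at the final one.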

Canonical Tag

- A canonical tag is placed in the HTML head section to tell the crawler which page holds the original content when other pages contain duplicates of it.

- Every page should have a canonical tag. It establishes which version is the original.

- When it is missing, crawlers may treat the page as a duplicate.

- Google does not like duplicate content. It favors unique and fresh content.
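Whether a page declares a canonical URL can be checked straight from its source. The sketch below uses Python's built-in `html.parser`; `CanonicalParser` and `canonical_url` are illustrative names, and the sample markup is invented.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = {name: (value or "") for name, value in attrs}
        if "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href") or None


def canonical_url(html):
    """Return the canonical URL declared in the page, or None if there is none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


if __name__ == "__main__":
    sample = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
    print(canonical_url(sample))
```

A result of `None` means the page leaves the choice of the original version to the crawler.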

Crawl Budget Exceeded

- Google has many spiders to crawl with, but there are millions of websites waiting to be crawled.

- Therefore, every spider enters a website with a particular limit of resources, and that limit is called the crawl budget.

- When a website has many redirects, they swallow the crawl budget before the spider reaches the actual page.

- We can find the crawl section in Google Search Console and check the crawl stats there.

- So we should give first preference to authoritative sites, earning more high-quality, relevant backlinks that point to the website.

Breadcrumbs

- When a page has no internal links pointing to it, it is called an orphan page, and it is unlikely to be indexed.

- When a website shows the correct navigation path to each page, that trail is called breadcrumbs.

- If the breadcrumbs are not set up properly, there is a chance crawlers will lose track of the webpage.
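Orphan pages can be found by comparing the list of pages with the internal links between them. The sketch below assumes the link data has already been collected, for example by a site crawl, into a dictionary; `find_orphans` and the sample paths are hypothetical.

```python
def find_orphans(links, home="/"):
    """Return pages that no other page links to.

    links maps each page to the list of internal pages it links to.
    The home page is skipped because crawlers reach it directly.
    """
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(page for page in links if page != home and page not in linked)


if __name__ == "__main__":
    # Hypothetical site structure for illustration.
    site = {
        "/": ["/blog", "/about"],
        "/blog": ["/blog/post-1"],
        "/about": [],
        "/blog/post-1": ["/"],
        "/landing-old": ["/"],
    }
    print(find_orphans(site))
```

Each page in the result needs at least one internal link, such as a breadcrumb or menu entry, before crawlers can reach it by following links.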

Indexing the Website Faster

When we want the website indexed faster, we should follow the steps given below.

1. Update the sitemap in Google Search Console

- Submitting the sitemap tells Google that the pages in it are important to crawl.

- Maintain a dynamic sitemap (for example, one generated by an ASPX page) so that new pages are not left out of the index.

2. Submit URL directly to Google

- When we search for "Submit URL to Google", the result is displayed with an input bar.

- We can paste the URL of the webpage there and click submit to request crawling and indexing.

- This only tells Google that the new webpage exists.

3. Use Google Search Console to fetch and submit

- From Google Search Console we can directly request a re-crawl and re-index of a particular page.

- There is a chance the request is approved almost instantly.

4. Build High Domain Authority (DA)

- If we want a new page to be indexed quickly, the chances are better when our page rank is high.

- More quality backlinks and good content build page authority.

- Update the website regularly and build brand awareness.

5. Improve Page Loading Speed

- When the page loads faster, Googlebot can also crawl it faster.

- When the page loads slowly, it swallows the crawl budget.

- If there is any problem with the hosting service, we can switch to a better one.

- When there is a problem with the structure of the website or its source code, we must optimize the code or remove the problem.

Tips for Instant Indexing the Website

- Add a link from another page on your site that already appears frequently in Google.

- Add an XML sitemap with the latest modified date in Google Search Console.

- Check the robots meta tag and the X-Robots-Tag header

- Use the Rank Math plugin for instant indexing

Once the Google Indexing API is set up, a signal is sent to Google automatically through the API whenever we create a new page or a new blog post. Within 2 hours, the webpage can appear in Google.

For this, we need the Google API and the Rank Math plugin, which we have to install and configure for instant indexing.

Google Search Console also offers updates such as:
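For readers curious what the plugin sends on our behalf, the sketch below builds (but does not send) the kind of request the Google Indexing API expects. The endpoint and the `URL_UPDATED` type come from Google's Indexing API; the function name `build_publish_request` and the token value are placeholders, and a real call needs an OAuth 2.0 access token from a service account that is verified as an owner in Search Console. Google documents this API for job posting and livestream pages, so results for ordinary pages are not guaranteed.

```python
import json
import urllib.request

# Publish endpoint of the Google Indexing API (v3).
INDEXING_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_publish_request(page_url, access_token, deleted=False):
    """Build the HTTP request that notifies Google a URL was updated or deleted.

    access_token is assumed to be a valid OAuth 2.0 token obtained elsewhere.
    """
    payload = {
        "url": page_url,
        "type": "URL_DELETED" if deleted else "URL_UPDATED",
    }
    return urllib.request.Request(
        INDEXING_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values; the request is only printed, never sent.
    request = build_publish_request("https://example.com/blog/new-post", "TOKEN")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Sending it would be a matter of passing the request to `urllib.request.urlopen`, which is what a plugin like Rank Math automates after it is configured.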

- Page Experience

- Search Console Insights

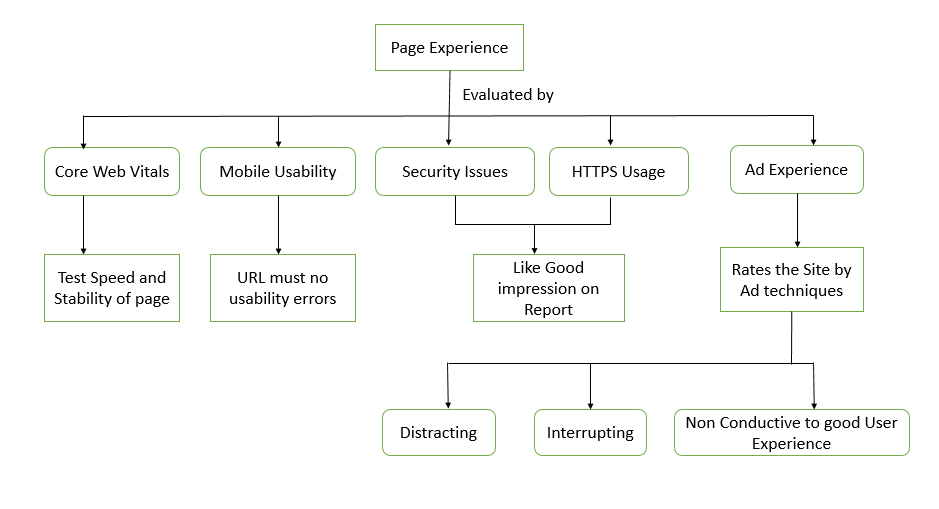

Page experience can be analyzed using the combined data from Google Search Console and Google Analytics. It can easily be evaluated from the diagram above.

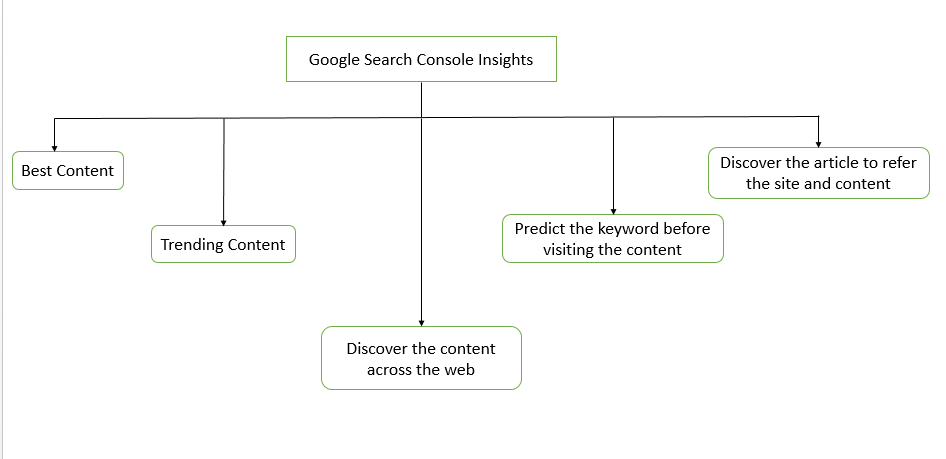

This is another big update coming soon to Google Search Console. With it, we can resolve problems on the website, and it can be useful for indexing as well.

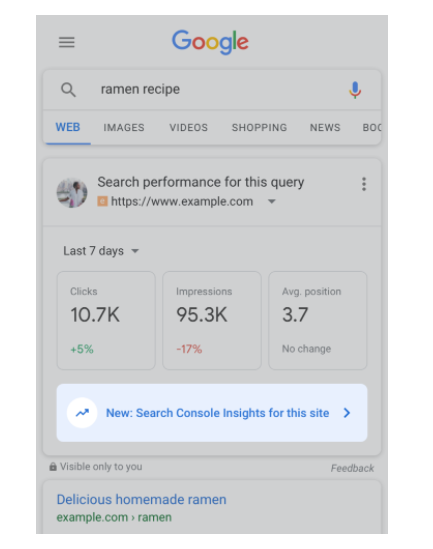

Once this update is implemented, we can easily analyze the website's performance from the Search Console Insights link shown above and then improve the site accordingly.

Google is the most widely used search engine in India, and it offers many free tools to give the best service to its users. The best way to get a website crawled and indexed is to use Google Search Console.